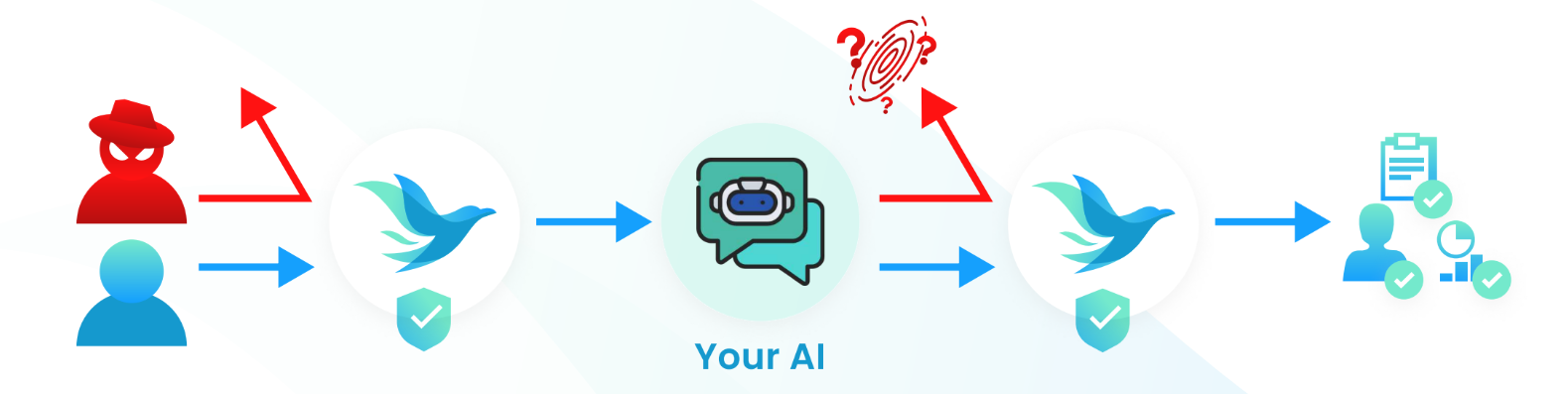

Guardrails (formerly Safeguards)

The Fireraven Guardrails are available through the API, which exposes three endpoints.

Before implementing the Fireraven Guardrails, make sure you have configured the Topics (for the Input Guardrails) and the Metrics to measure on the chatbot response (for the Output Guardrails), the same way you would for the Secure Chatbot.

Creating a conversation

POST https://api.fireraven.ai/public/conversations

You first need to create a conversation to which the guardrails will be applied. This endpoint creates a conversation that stores and organizes the messages exchanged with your users.

Good conversation management makes later summaries and analytics clearer. It is recommended to use this endpoint to create a new conversation for each user who accesses your LLM or chatbot.
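The one-conversation-per-user recommendation can be sketched as a small in-memory registry. This is an illustrative sketch, not part of the Fireraven API: `ConversationRegistry` and `conversation_id_for` are hypothetical names, and `create_conversation` is assumed to be any callable that calls the endpoint and returns the conversation metadata with its `id`.

```python
class ConversationRegistry:
    """Keep one conversation ID per user, creating it on first use."""

    def __init__(self, create_conversation):
        # Assumption: create_conversation(user_id) calls POST /public/conversations
        # and returns the metadata dict, which contains at least an "id" key.
        self._create_conversation = create_conversation
        self._ids = {}

    def conversation_id_for(self, user_id):
        """Return the user's conversation ID, creating the conversation once."""
        if user_id not in self._ids:
            self._ids[user_id] = self._create_conversation(user_id)["id"]
        return self._ids[user_id]
```

A real deployment would likely persist this mapping instead of keeping it in memory, so that a restart does not create duplicate conversations.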

Query parameter

clientId string (required)

Indicates in which chatbot the conversation should be created. This directly impacts how messages are handled since each chatbot can be configured differently.

Body parameters

name string or null (optional)

The name of the conversation. Useful if you want to organize your conversations by name.

Default: API Conversation {Date ISO8601}

description string or null (optional)

The description of the conversation.

Returns

Metadata of the conversation

id string

The unique identifier of the conversation.

name string

The name of the conversation.

description string

The description of the conversation.

createdAt string

The ISO8601 date string of when the conversation was created.

isClient boolean

Whether the conversation is a client conversation. Every conversation created via the API is a client conversation.

Example

curl -X 'POST' \
  'https://api.fireraven.ai/public/conversations?clientId=<your-clientId>' \
  -H 'X-Api-Key: <your-apikey>' \
  -H 'Content-Type: application/json' \
  -d '{
    "name": "My first conversation",
    "description": "My first conversation created from the Fireraven API"
  }'

{
  "id": "00000000-0000-4000-0000-000000000000",
  "name": "My first conversation",
  "description": "My first conversation created from the Fireraven API",
  "createdAt": "1999-12-31T00:00:00.000Z",
  "isClient": true
}
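The same request can be prepared from Python. The following is a minimal sketch using only the standard library. The helper names `build_create_conversation_request` and `create_conversation` are hypothetical; the URL, the `clientId` query parameter, the `X-Api-Key` header and the body fields are taken from the example above. Leaving optional fields out of the body when they are not set is an assumption.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.fireraven.ai/public"


def build_create_conversation_request(client_id, api_key, name=None, description=None):
    """Prepare (but do not send) the POST request that creates a conversation."""
    query = urllib.parse.urlencode({"clientId": client_id})
    # Assumption: optional fields that are not set are simply omitted.
    body = {}
    if name is not None:
        body["name"] = name
    if description is not None:
        body["description"] = description
    return urllib.request.Request(
        url=f"{API_BASE}/conversations?{query}",
        data=json.dumps(body).encode("utf-8"),
        headers={"X-Api-Key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def create_conversation(client_id, api_key, name=None, description=None):
    """Send the request and return the conversation metadata as a dict."""
    request = build_create_conversation_request(client_id, api_key, name, description)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

The `id` field of the returned dict is the `conversationId` expected by the two guardrail endpoints.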

Input Guardrails (analytics)

POST https://api.fireraven.ai/public/safeguard/input

Execute the Fireraven Input Guardrails. This analyzes the user's input to decide whether it should be allowed, because it matches a Safe Topic and a safe question, or blocked, because it falls under an Unsafe Topic or is off topic. It returns the guardrail result for every request from the user.

Query parameter

conversationId string (required)

Indicates in which conversation the message should be stored after analysis. This conversationId can be obtained from the /public/conversations endpoint.

Body parameters

messageHistory array (required)

Indicates the history of the conversation, used as context for the last message. For a history of n messages, the order should be as follows: index 0 is the oldest message to consider, and index n - 1 is the newest message in the conversation, which is the one evaluated as input.

{
  "role": "string", // Role of the message. Value is `user` or `system`
  "content": "string" // Content of the message
}
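As an illustration of the ordering described above, the sketch below builds a `messageHistory` array from a list of (role, content) pairs given oldest first. The function name `build_message_history` is hypothetical, and rejecting a history whose newest message is not a `user` message is an assumption made for this sketch, not a documented API rule.

```python
def build_message_history(turns):
    """Turn (role, content) pairs, oldest first, into a messageHistory array."""
    allowed_roles = {"user", "system"}
    history = []
    for role, content in turns:
        if role not in allowed_roles:
            raise ValueError(f"unsupported role: {role!r}")
        history.append({"role": role, "content": content})
    if not history:
        raise ValueError("messageHistory must contain at least one message")
    # The last entry is the message evaluated by the Input Guardrails.
    # Assumption: that message is expected to come from the user.
    if history[-1]["role"] != "user":
        raise ValueError("the newest message should be the user input to evaluate")
    return history


history = build_message_history([
    ("system", "Hi! How can I help you?"),
    ("user", "What is the weather today?"),
])
```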

Returns

Result of the input analysis

original object

The original message saved to the conversation. This is the last message of the messageHistory input body parameter.

{
  "id": "string", // Unique identifier of the message
  "direction": "string", // Direction of the message. Either Incoming (system) or Outgoing (user)
  "status": "string", // Status of the message (saved)
  "content": {
    "text": "string", // Text content of the message
    "summaryWithContext": "string" // The summarized question which includes information from the context, the other messages included in the `messageHistory` input body parameter.
  }
}

summarized string

The summarized question which includes information from the context, the other messages included in the messageHistory input body parameter.

result array

The result of the analysis showing every topic and question found to match the message.

{
  "topic": "string", // Name of the topic
  "state": "string", // State of the topic (safe, unsafe, etc.)
  "sentences": [
    {
      "id": "string", // Unique identifier of the sentence (question)
      "text": "string", // Text of the sentence (question)
      "similarity": 0 // Similarity between the question of the user and the closest question in the Input Guardrails (between 0 and 1)
    }
  ]
}

allowed boolean

The recommended decision on whether the message should be allowed to be sent to the LLM.

Example

curl -X 'POST' \
  'https://api.fireraven.ai/public/safeguard/input?conversationId=<your-conversationId>' \
  -H 'X-Api-Key: <your-apikey>' \
  -H 'Content-Type: application/json' \
  -d '{
    "messageHistory": [
      {
        "role": "system",
        "content": "Hi! How can I help you?"
      },
      {
        "role": "user",
        "content": "What is the weather today?"
      }
    ]
  }'

{
  "original": {
    "id": "00000000-0000-4000-0000-000000000000",
    "direction": "Outgoing",
    "status": "Saved",
    "content": {
      "text": "What is the weather today?",
      "summaryWithContext": "What is the weather today?"
    }
  },
  "summarized": "What is the weather today?",
  "result": [
    {
      "topic": "The weather",
      "state": "safe",
      "sentences": [
        {
          "id": "00000000-0000-4000-0000-000000000000",
          "text": "What is the weather today in the city?",
          "similarity": 0.75
        }
      ]
    }
  ],
  "allowed": true
}
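One possible way to act on this response is sketched below. The function name `summarize_input_result` is hypothetical. The sketch assumes `allowed` is returned as a JSON boolean, as in the example above, and treats anything other than `true` as a block; the sample data is a trimmed copy of the example response.

```python
def summarize_input_result(response):
    """Return (allowed, matches) from an Input Guardrails response dict."""
    matches = []
    for topic in response.get("result", []):
        for sentence in topic.get("sentences", []):
            matches.append((topic["topic"], topic["state"], sentence["similarity"]))
    # Closest configured question first.
    matches.sort(key=lambda match: match[2], reverse=True)
    # Assumption: only a strict boolean true lets the message through.
    allowed = response.get("allowed") is True
    return allowed, matches


example_response = {
    "summarized": "What is the weather today?",
    "result": [
        {
            "topic": "The weather",
            "state": "safe",
            "sentences": [
                {
                    "id": "00000000-0000-4000-0000-000000000000",
                    "text": "What is the weather today in the city?",
                    "similarity": 0.75,
                }
            ],
        }
    ],
    "allowed": True,
}

allowed, matches = summarize_input_result(example_response)
if allowed:
    print("forward to the LLM:", example_response["summarized"])
else:
    print("blocked, matched topics:", matches)
```

Keeping `original.id` from the response is useful as well, since it can be passed as `inputId` to the output endpoint.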

Output Guardrails (metrics)

POST https://api.fireraven.ai/public/safeguard/output

Execute the Fireraven Output Guardrails. This analyzes the input and output of an exchange and gives metrics on the output message.

You have to specify the metrics you want to apply from the Fireraven Dashboard. You can see how to configure and create the metrics you want to apply on your LLM responses here.

Query parameter

conversationId string (required)

Indicates in which conversation the message should be stored after analysis. This conversationId can be obtained from the /public/conversations endpoint.

Body parameters

inputId string (optional, but recommended if you are also using the Input Guardrails)

The unique identifier of the input message, if the message has already been registered in a conversation. The message has to belong to the same conversation as the one specified as a query parameter (conversationId).

input string (optional)

If no inputId is available, this parameter is used instead. If it is provided alongside an inputId, it is ignored. This is the text content of the message that triggered the output. This text will be registered as a new message in the conversation.

Even though inputId and input are marked as optional, you have to provide at least one of them.

It is advantageous to provide the ID of the messages already registered as input and output. It makes the analysis of your inputs and outputs more cohesive in the dashboard.

outputId string (optional)

The unique identifier of the output message, if the message has already been registered in a conversation. The message has to belong to the same conversation as the one specified as a query parameter (conversationId).

output string (optional)

If no outputId is available, this parameter is used instead. If it is provided alongside an outputId, it is ignored. This is the text content generated by the LLM in response to the input.

Even though outputId and output are marked as optional, you have to provide at least one of them.
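The "at least one of each pair" rule can be checked on the client before calling the endpoint. The sketch below is an assumption about how one might do it; `build_output_body` is a hypothetical helper, and dropping the text when an ID is available simply mirrors the precedence described above.

```python
def build_output_body(input_id=None, input_text=None, output_id=None, output_text=None):
    """Build the JSON body for the output endpoint, validating both pairs."""
    if input_id is None and input_text is None:
        raise ValueError("provide inputId or input")
    if output_id is None and output_text is None:
        raise ValueError("provide outputId or output")
    body = {}
    # An ID takes precedence over the text, so the text is left out when an ID is set.
    if input_id is not None:
        body["inputId"] = input_id
    else:
        body["input"] = input_text
    if output_id is not None:
        body["outputId"] = output_id
    else:
        body["output"] = output_text
    return body


body = build_output_body(
    input_id="00000000-0000-4000-0000-000000000000",
    output_text="The weather forecast is 24°C with a mix of sun and cloud for the day.",
)
```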

Returns

Result of the output analysis.

id string

The unique identifier for this analysis.

inputMessage object

The input message that was either found using the inputId or created from the input body parameter.

{
  "id": "string", // Unique identifier of the message
  "direction": "string", // Direction of the message. Either Incoming (system) or Outgoing (user)
  "status": "string", // Status of the message (saved)
  "content": {
    "text": "string", // Text content of the message
    "summaryWithContext": "string" // The summarized question which includes information from the context, the other messages included in the `messageHistory` input body parameter.
  }
}

outputMessage object

The output message that was either found using the outputId or created from the output body parameter.

{
  "id": "string", // Unique identifier of the message
  "direction": "string", // Direction of the message. Either Incoming (system) or Outgoing (user)
  "status": "string", // Status of the message (saved)
  "content": {
    "text": "string", // Text content of the output message from the LLM
    "summaryWithContext": "string" // The output message as processed by the Output Guardrails
  }
}

metricResults array

Array of objects each containing the result of a metric.

{
  "metric": {
    "id": "string", // Unique identifier of the metric
    "name": "string", // Name of the metric
    "description": "string", // Description of the metric
    "criticality": "string", // Criticality level of the metric, can be CRITICAL, HIGH, MEDIUM, LOW, NONE
    "detectionThreshold": "number", // At which point an issue is detected for the metric, value between 0 and 1
    "detectionIsAboveThreshold": "boolean", // If an issue was found (detection over threshold) or not for the metric
    "isDefault": "boolean", // If the metric is one of the default ones offered by Fireraven
    "isArchived": "boolean", // If the metric is archived
    "createdAt": "date", // The date of creation of the metric
    "updatedAt": "date", // The date of the last update of the metric
    "archivedAt": "date" // The date at which the metric was archived
  },
  "status": "string", // The status of the metric, can be SUCCESS (if there's no issue), FAILED (if there's an issue), UNKNOWN
  "value": "number", // The detection value of the metric
  "isIssue": "boolean" // If there's an issue
}

createdAt string

ISO8601 representation of the time at which this analysis was created.

Example

curl -X 'POST' \
  'https://api.fireraven.ai/public/safeguard/output?conversationId=<your-conversationId>' \
  -H 'X-Api-Key: <your-apikey>' \
  -H 'Content-Type: application/json' \
  -d '{
    "inputId": "00000000-0000-4000-0000-000000000000",
    "output": "The weather forecast is 24°C with a mix of sun and cloud for the day."
  }'

{
  "id": "00000000-0000-4000-0000-000000000000",
  "inputMessage": {
    "id": "00000000-0000-4000-0000-000000000000",
    "direction": "Outgoing",
    "status": "Saved",
    "content": {
      "text": "What is the weather today?"
    }
  },
  "outputMessage": {
    "id": "00000000-0000-4000-0000-000000000000",
    "direction": "Incoming",
    "status": "Saved",
    "content": {
      "text": "The weather forecast is 24°C with a mix of sun and cloud for the day."
    }
  },
  "metricResults": [
    {
      "metric": {
        "id": "00000000-0000-4000-0000-000000000000",
        "name": "Financial advice",
        "description": "The text contains a financial advice.",
        "criticality": "CRITICAL",
        "detectionThreshold": 0.1,
        "detectionIsAboveThreshold": true,
        "isDefault": false,
        "isArchived": false,
        "createdAt": "1999-12-31T00:00:00.000Z",
        "updatedAt": "1999-12-31T00:00:00.000Z",
        "archivedAt": null
      },
      "status": "SUCCESS",
      "value": 0.9546173468312323,
      "isIssue": true
    },
    ...
  ],
  "createdAt": "1999-12-31T00:00:00.000Z"
}
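A response like this one could be reduced to the list of metrics that raised an issue, most critical first. The sketch below is one way to do it under stated assumptions: `find_issues` and `CRITICALITY_RANK` are hypothetical names, the criticality levels are the ones listed in the schema, and relying on `isIssue` rather than `status` is a choice made for this sketch. The sample data is a trimmed copy of the example response.

```python
# Criticality levels from the schema, most severe first.
CRITICALITY_RANK = {"CRITICAL": 0, "HIGH": 1, "MEDIUM": 2, "LOW": 3, "NONE": 4}


def find_issues(analysis):
    """Return (name, criticality, value) for each metric flagged as an issue."""
    flagged = [
        result
        for result in analysis.get("metricResults", [])
        if result.get("isIssue") is True
    ]
    # Most critical first; unrecognized criticality values sort last.
    flagged.sort(
        key=lambda result: CRITICALITY_RANK.get(
            result["metric"]["criticality"], len(CRITICALITY_RANK)
        )
    )
    return [
        (result["metric"]["name"], result["metric"]["criticality"], result["value"])
        for result in flagged
    ]


example_analysis = {
    "metricResults": [
        {
            "metric": {"name": "Financial advice", "criticality": "CRITICAL"},
            "status": "SUCCESS",
            "value": 0.9546173468312323,
            "isIssue": True,
        }
    ]
}

for name, criticality, value in find_issues(example_analysis):
    print(f"{criticality}: {name} (value {value:.2f})")
```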